This is the third in a series of articles on the human rights implications of artificial intelligence (AI) in the context of Southeast Asia. In the last article, we discussed the implications of AI for economic, social, and cultural rights, driving home the point that AI does yield developmental benefits if it is implemented properly. The human rights concerns there centred on AI safety and unintended consequences.

In this article, we take a closer look at what can happen when AI is weaponised and used against civil and political rights (CPR) such as the right to life and self-determination, as well as individual freedoms of expression, religion, association, assembly, and so on. Within the space of this article, it is impossible to cover the full extent to which AI can be used against CPR, so we will address only three imminent threats: mass surveillance by governments, microtargeting that can undermine elections, and AI-generated disinformation. For those interested in digging deeper, a report by a collection of academic institutions on the malicious use of AI endangering digital, physical, and political security makes for a riveting read.

AI used for Government Surveillance

Privacy is a fundamental human right, and the erosion of privacy affects other civil freedoms such as free expression, assembly, and association. In Southeast Asia, where most countries tilt towards the authoritarian side of the democratic spectrum, governments have shown that they will go to great lengths to quash political dissent, such as wielding draconian laws or using extralegal measures to intimidate dissenters.

With machine learning that can sift through mountains of data collected inexpensively and make inferences that were previously invisible, surveillance of the masses becomes cheaper and more effective, making it easier for the powerful to stay in power.

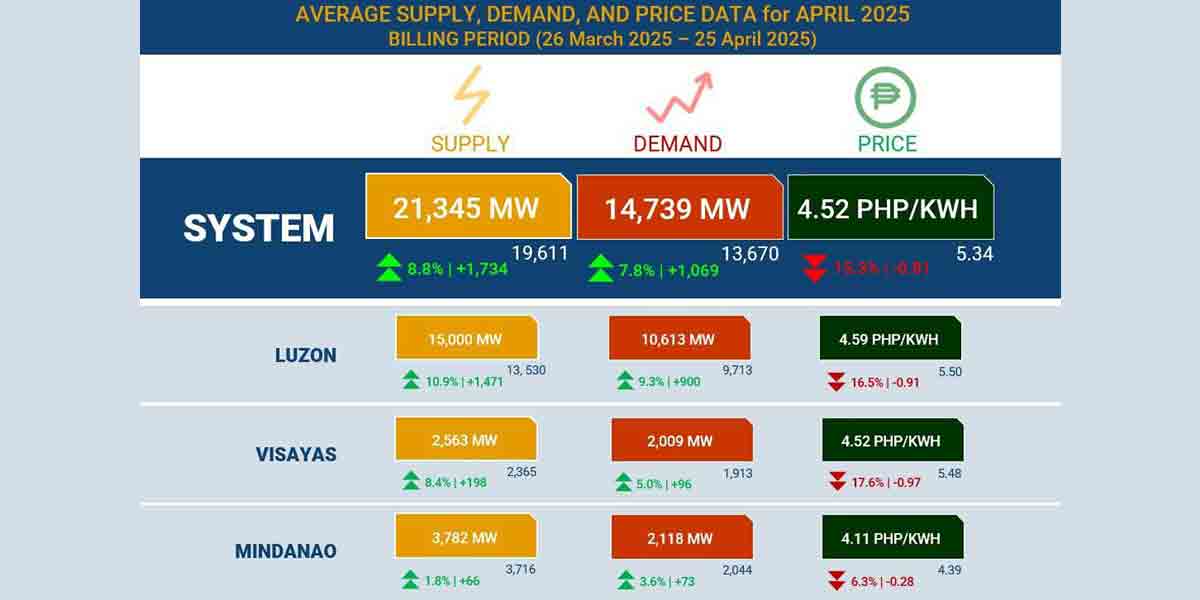

The table below is adapted from the AI Global Surveillance Index (AIGS 2019), extracting the seven Southeast Asian countries covered (with no data on Brunei, Vietnam, Cambodia, and Timor-Leste). It shows that most countries within the region use two or more types of surveillance technology (smart/safe city implementations, facial recognition, and smart policing), and that all of these countries use technologies imported from China and, to a lesser extent, from the US as well.

To provide a wider context, at least 75 out of 176 countries covered in the AIGS 2019 are actively using AI for surveillance purposes, including many liberal democracies. The index does not differentiate between legitimate and unlawful use of AI surveillance. Given the context of Southeast Asia, civil society in the region might want to err on the side of caution. A CSIS report points out that Huawei’s “Safe City” solutions are popular with non-liberal countries, and raises the concern that China may be “exporting authoritarianism”. China itself has used facial recognition (developed by Chinese AI companies Yitu, Megvii, SenseTime, and CloudWalk) to profile and track the Muslim Uighur community. It is also known that close to a million Uighurs have been placed in totalitarian “re-education camps”, illustrating the chilling possibilities of human rights violations connected to mass surveillance.

Other forms of government surveillance include social media surveillance and using AI to collect and process personal data and metadata from social media platforms. The Freedom on the Net (FOTN) Report (2019) states that 13 out of the 15 Asian countries that it covers have a social media surveillance programme in use or under development, but does not specify which. The odds are high for these eight Southeast Asian countries covered in the report: Philippines, Malaysia, Singapore, Indonesia, Cambodia, Myanmar, Thailand, and Vietnam.

The FOTN report highlights Vietnam and the Philippines in particular. In 2018, Vietnam “announced a new national surveillance unit equipped with technology to analyse, evaluate, and categorise millions of social media posts”; and in the same year, Philippine officials were trained by the US Army on developing a new social media unit, which reportedly would be used to counter disinformation by terrorist organisations. Digital surveillance by the government can also cast a wider net outside social media platforms. In 2018, the Malaysia Internet Crime Against Children (Micac) unit of the Malaysian police demonstrated to a local daily its capability to locate pornography users in real time, and said that it had built a “data library” of these individuals. This is a gross invasion of privacy, as pointed out in a statement by some ASEAN CSOs.

Microtargeting to change voter behaviour

In Southeast Asia, civil society is more vigilant about government surveillance than corporate surveillance. However, the effects of corporate surveillance, especially by big tech companies like Facebook and Google, may be equally sinister or even more far-reaching when their AI technologies, combined with an unimaginable amount of data, are up for hire to predict and change user behaviour for their advertisers. This is particularly problematic when the advertisers are digital campaigners for political groups looking to change public opinion or voter behaviour, affecting the electoral rights of individuals.

Five years ago, researchers had already found that, based on Facebook likes, machines could know you better than anyone else (300 likes were all it took to know you better than your spouse did, and only 10 likes to beat a co-worker). Since then, there have been scandals such as that of Cambridge Analytica, which illegally obtained the data of tens of millions of Facebook users and used it to create psychographic profiles, so that it could microtarget voters with different messaging to swing votes in the 2016 US presidential election. Similar tactics allegedly influenced the outcome of the Brexit referendum.

Cambridge Analytica has since closed down, but the scandal brought the business model of microtargeted political advertising under public scrutiny. Political commentators have pointed out that Facebook does pretty much the same thing, only in a bigger and more ambitious way. With the data of 2 billion users at its disposal, Facebook is able to train its machine learning systems to predict things like when an individual user is about to switch brand loyalty, and to rent this user intelligence to whoever pays. It does not distinguish between regular advertising and political advertising. It is worth mentioning that Twitter has banned political advertising, and Google has disabled microtargeting for political ads.

86% of Southeast Asia’s Internet users use Facebook. Within the region, concerns have already arisen regarding microtargeting to influence elections. A report that tracks digital disinformation in the 2019 Philippine midterm election points out that Facebook Boosts (Facebook’s advertising mechanism) are essential for local campaigns because of their ability to reach specific geographical locations. Besides advertising the Facebook pages of official candidates, Facebook Boosts were also used to promote negative content about political opponents. In Indonesia, ahead of the 2019 general elections, experts warned of voter behavioural targeting and voter microtargeting strategies which might exploit personal data of Indonesian voters to change election outcomes.

AI-generated content fuels disinformation campaigns

In the digital era, rumour-mongering becomes much more effective because of the networked nature of our communication. As a result, disinformation or fake news has become a worldwide problem. In Southeast Asia, the gravest example of its possible consequences is the ethnic cleansing of Rohingyas in Myanmar, reportedly fuelled by the spread of disinformation and hate speech on social media.

The disinformation economy has flourished within the region. In Indonesia, fake news factories are used to churn out content to attack political opponents and to support their clients. PR companies in the Philippines cultivate online communities and surreptitiously insert disinformation and political messaging with the help of micro and nano influencers. As nefarious actors establish structures to create and profit from disinformation, AI will make content generation much easier and more sophisticated for them.

One of the scariest possibilities of AI-generated content is the so-called “deepfake”, also known as “synthetic media”: manipulated video or audio that looks or sounds highly realistic. Deepfakes are already a reality, and it is only a matter of time (likely a matter of months) before they are indistinguishable from real footage and cheap enough to be produced by any novice. At the moment, they are mainly being used to produce fake pornography of celebrities, but there is a dizzying array of ways in which they could be used to create disinformation. In the region, there is at least one case in Malaysia of a politician claiming his sex video to be a politically motivated deepfake.

Video: https://www.youtube.com/watch?v=u2lX70U7N0c

The video above gives a good introduction to what deepfakes are, with some examples, and explains why we should be concerned. WITNESS has a good resource pool of articles and videos on deepfakes for those interested in digging further.

Another example of what AI is able to do in terms of generating realistic content can be found in the interactive component of this New York Times article: with the click of a button, one can generate commentary on any topic, with any political slant. As can be imagined, the cost of maintaining a cyber army for astroturfing or trolling goes down drastically if machines are used to generate messages that look like they have been written by humans. A separate report, which warns of AI-generated “horrifyingly plausible fake news”, describes a system called GROVER that can generate a fake news article based on only a headline, and that can even be customised to mimic the styles of major news outlets such as The Washington Post or The New York Times.

The post-truth era has many faces. Lastly, you can have a bit of fun and check out ThisPersonDoesNotExist.com or WhichFaceIsReal.com to see the level of realism in computer-generated photos of human faces. That realism makes it easy to generate photos for fake social media profiles.

In Conclusion

As this article has demonstrated, AI can be, and has been, weaponised to achieve ends that are incompatible with civil and political rights. At the very least, civil society within the region should invest energy and resources into following technological trends and new applications of AI so that it will not be taken by surprise by innovations from malicious actors. Given the nature of machine learning and AI, the efficacy of these technologies is expected only to improve. Civil society and human rights defenders will need to participate in discussions of AI governance and push tech companies to be more accountable for the possible weaponisation of the technologies that they have created, in order to safeguard human rights globally.

Dr. Jun-E Tan is an independent researcher based in Kuala Lumpur. Her research and advocacy interests are broadly anchored in the areas of digital communication, human rights, and sustainable development. Jun-E’s newest academic paper, “Digital Rights in Southeast Asia: Conceptual Framework and Movement Building”, was published in December 2019 by SHAPE-SEA in an open access book titled “Exploring the Nexus Between Technologies and Human Rights: Opportunities and Challenges in Southeast Asia”.